Alexandre Deiss & Guillaume Merle & Marc Demoustier & Quoc Duong Nguyen

February 1st, 2022

What is music source separation?

Music source separation is the task of decomposing music into its constitutive components.

Four stems: vocals, drums, bass and the remaining other instruments.

Dataset:

MUSDB18-HQ

- 150 uncompressed stereo tracks

- Format: .wav

- Sampling rate: 44.1 kHz

- 4 stems

State of the art

Waveform

Operates directly on the raw input waveform

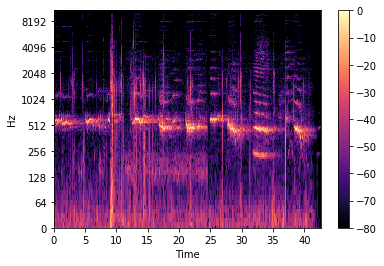

Spectrogram

Visual representation of the spectrum of frequencies of a signal as it varies with time.
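To make the definition concrete, here is a minimal sketch of a magnitude spectrogram computed with a short-time Fourier transform, using only the Python standard library. The function name `stft_magnitude`, the frame size, the hop size and the 8 kHz test tone are illustrative assumptions, not settings taken from Open-Unmix or Demucs.

```python
import cmath
import math


def stft_magnitude(signal, frame_size=64, hop=32):
    """Return a list of frames, each a list of magnitudes per frequency bin."""
    # Hann window to reduce spectral leakage at the frame edges.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_size)
              for n in range(frame_size)]
    frames = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        frame = [signal[start + n] * window[n] for n in range(frame_size)]
        spectrum = []
        # Naive DFT over the non-negative frequencies only.
        for k in range(frame_size // 2 + 1):
            acc = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                      for n in range(frame_size))
            spectrum.append(abs(acc))
        frames.append(spectrum)
    return frames


# Illustrative input: a 1 kHz sine sampled at 8 kHz.
sample_rate = 8000
tone = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(512)]
spec = stft_magnitude(tone)

# The strongest bin of the first frame should sit at the tone frequency.
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
peak_hz = peak_bin * sample_rate / 64
print(len(spec), "frames,", len(spec[0]), "bins, peak at", peak_hz, "Hz")
```

Each row of `spec` is one time step and each column one frequency bin, which is the grid a spectrogram image displays. A real pipeline would use an FFT rather than this naive DFT.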

Open-Unmix

- Spectrogram domain

- Favored simplicity over performance

- Fast prediction

Demucs

- Demucs V2: waveform domain

- Demucs V3: hybrid (waveform + spectrogram)

- Both are complex models

- Slow prediction

- Among the best metric scores

Our approaches

Scrapped idea

- Waveform domain

- Inspired by Demucs V2

But...

- Too long to train

- Not really innovative

Adopted idea

- Inference on PyTorch Mobile

- Using Demucs or Open-Unmix

- Directly download music from YouTube

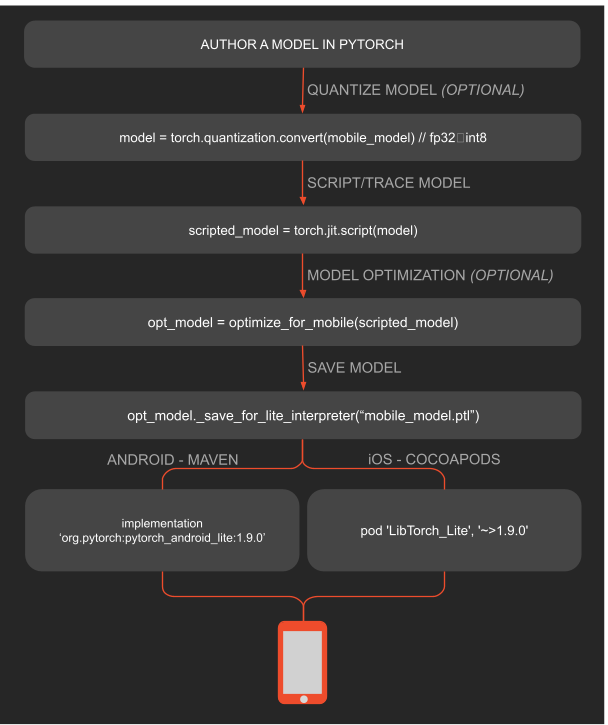

Converting model into TorchScript

What is TorchScript?

- A way to create serializable and optimizable models from PyTorch code

- Supports mobile-specific optimizations

- The only way to run PyTorch models on mobile
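As an illustration, the sketch below scripts a small model, serializes it and loads it back. `TinySeparator` is a hypothetical stand-in built from linear and LSTM layers, not the Open-Unmix architecture, and the layer sizes are arbitrary. It assumes PyTorch is installed.

```python
import io

import torch
from torch import nn


class TinySeparator(nn.Module):
    """Hypothetical stand-in model (not Open-Unmix): Linear -> LSTM -> Linear."""

    def __init__(self, n_bins: int = 16, hidden: int = 8):
        super().__init__()
        self.encode = nn.Linear(n_bins, hidden)
        self.lstm = nn.LSTM(hidden, hidden, batch_first=True)
        self.decode = nn.Linear(hidden, n_bins)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        h = torch.tanh(self.encode(x))
        h, _ = self.lstm(h)
        # Predict a non-negative mask and apply it to the input.
        return torch.relu(self.decode(h)) * x


model = TinySeparator().eval()

# Compile the Python module to TorchScript.
scripted = torch.jit.script(model)

# Serialize to an in-memory buffer and load it back, as a file would be.
buffer = io.BytesIO()
torch.jit.save(scripted, buffer)
buffer.seek(0)
restored = torch.jit.load(buffer)

# The restored module should reproduce the original outputs.
x = torch.rand(1, 5, 16)  # (batch, frames, bins)
with torch.no_grad():
    same = torch.allclose(model(x), restored(x))
print("outputs match:", same)
```

The loaded module no longer needs the Python class definition, which is what allows it to run outside a Python environment.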

Workflow

Model creation

- Make specific changes to the model to be TorchScript-able

- Get pretrained weights

Model chosen

- Open-Unmix was selected:

  - Smaller model

  - Faster prediction

  - Most of the preprocessing is in the model

- Demucs is TorchScript-able, but:

  - Too complex

  - Too slow on mobile

  - A lot of preprocessing had to be implemented on mobile

Quantization (Optional)

- Converts the 32-bit floating-point numbers in the model parameters to 8-bit integers

- Reduces model size

- Reduces memory footprint

- Reduces prediction time

- Open-Unmix uses only linear and LSTM layers, so dynamic quantization is used
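The float-to-integer conversion can be sketched with plain arithmetic. The example below uses a simplified symmetric, per-tensor scheme chosen for illustration. It is an assumption, not PyTorch's actual implementation, and the weight values are made up.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


# Made-up weights, for illustration only.
weights = [0.82, -1.27, 0.003, 0.5, -0.41]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

Each weight then needs one byte instead of four, at the cost of a rounding error of at most half a quantization step. In dynamic quantization, the weights are converted ahead of time while activations are quantized on the fly at inference.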

Quantization tradeoff

- The quantized umxhq model is around 2.3x faster than the base model

- The quantized umxl model is around 3.4x faster than the base model

- Both are slightly less accurate

Model is ready

- Applied PyTorch mobile optimizations

- Automatic pipeline to create and upload the models (https://github.com/demixr/openunmix-torchscript/releases/latest)

Mobile application

Made with Flutter

- Flutter is an open-source UI software development kit created by Google

- Used to develop cross-platform applications for Android, iOS, Linux, macOS, Windows, Google Fuchsia, and the web from a single codebase

- Compatible with platform native code:

  - Android: Java / Kotlin

  - iOS: Swift / Objective-C

Mobile inference in Java

- Inference by chunks to prevent memory overflow

- Resample audio from any sampling rate to the model sampling rate (44.1 kHz) using Oboe (C++)

- Convert mono files to stereo

- Re-organize model input and output

- Save output files in .wav format
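The chunking and mono-to-stereo steps can be sketched as follows. The app implements them in Java. This Python version only illustrates the idea, and `mono_to_stereo`, `separate_in_chunks` and `fake_model` are hypothetical names. The fake model stands in for the real network and simply returns four scaled copies of its input.

```python
from typing import Callable, List, Sequence

Stereo = List[List[float]]  # [channel][sample]


def mono_to_stereo(samples: Sequence[float]) -> Stereo:
    """Duplicate the single channel so the model always sees two channels."""
    return [list(samples), list(samples)]


def separate_in_chunks(audio: Stereo, chunk_size: int,
                       model: Callable[[Stereo], List[Stereo]],
                       n_stems: int = 4) -> List[Stereo]:
    """Run the model on fixed-size chunks and concatenate the stems.

    Only one chunk is passed to the model at a time, which bounds the
    peak memory needed for a single forward pass.
    """
    stems: List[Stereo] = [[[], []] for _ in range(n_stems)]
    n_samples = len(audio[0])
    for start in range(0, n_samples, chunk_size):
        chunk = [channel[start:start + chunk_size] for channel in audio]
        for stem, output in zip(stems, model(chunk)):
            for c in range(2):
                stem[c].extend(output[c])
    return stems


def fake_model(chunk: Stereo) -> List[Stereo]:
    """Placeholder for the real network: four scaled copies of the input."""
    return [[[s * gain for s in channel] for channel in chunk]
            for gain in (0.1, 0.2, 0.3, 0.4)]


audio = mono_to_stereo([float(i) for i in range(10)])
stems = separate_in_chunks(audio, chunk_size=4, model=fake_model)
print(len(stems), "stems of", len(stems[0][0]), "samples each")
```

With a real recurrent model, outputs near chunk boundaries may differ from a single full-length pass. This sketch does not address that.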

App design

Tons of features!

- Choose between models (umxhq & umxl)

- Download music from YouTube

- Import music from the device (mp3 and wav)

- Source separation in 4 different stems

- Local library of unmixed songs

- Integrated music player with the ability to mute / unmute each stem

- More to come!

Performance

Using a Google Pixel 6, demixing a 4-minute file takes:

- 3 minutes using the quantized umxhq model.

- 4 minutes 10 seconds using the quantized umxl model.
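These timings can be expressed as a real-time factor, meaning processing time divided by audio duration. The short sketch below does the arithmetic on the figures above. The name `real_time_factor` is an illustrative choice.

```python
def real_time_factor(processing_seconds: float, audio_seconds: float) -> float:
    """Values below 1 mean the model runs faster than playback."""
    return processing_seconds / audio_seconds


audio_seconds = 4 * 60  # 4-minute track

timings = {
    "umxhq (quantized)": 3 * 60,      # 3 minutes
    "umxl (quantized)": 4 * 60 + 10,  # 4 minutes 10 seconds
}

factors = {name: real_time_factor(seconds, audio_seconds)
           for name, seconds in timings.items()}

for name, factor in factors.items():
    print(f"{name}: {factor:.2f}x real time")
```

On this device, the quantized umxhq model runs faster than playback, while the quantized umxl model is slightly slower than playback.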

Get Demixr now

Demo

References